VR-SLAM: a low-cost, low-power positioning scheme for mobile robots that fuses a monocular camera with UWB. It recovers quickly from tracking failures and obtains a global map easily, even without loop closure. From Nanyang Technological University (NTU).

VIRAL SLAM: Tightly Coupled Camera-IMU-UWB-Lidar SLAM

- Unlike visual odometry (VO), UWB ranging is drift-free and unaffected by visual conditions. Unlike GPS, UWB works both indoors and outdoors. UWB sensors are also smaller, lighter, and more affordable than lidar, and simpler to install on robotic platforms.

- In multi-robot settings, UWB can also serve as a communication network, provide a variable baseline between two robots, or supply inter-robot constraints for pose graph optimization or formation control.

- On the other hand, UWB sensors require good line-of-sight (LoS) for accurate measurements and provide no perceptual information about the environment. Range-based positioning also depends on installing enough UWB anchors in a configuration that is not degenerate.
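To make the dependence on anchor count and geometry concrete, here is a minimal sketch of range-based positioning by Gauss-Newton iteration. It is a 2D simplification written for these notes, not code from the paper; the function name `multilaterate_2d` and the anchor layout in the usage note are assumptions for illustration.

```python
import math

def multilaterate_2d(anchors, ranges, guess, iterations=20):
    """Gauss-Newton estimate of a 2D position from ranges to known anchors."""
    x, y = guess
    for _ in range(iterations):
        # Accumulate the normal equations (J^T J) step = -J^T r.
        a11 = a12 = a22 = b1 = b2 = 0.0
        for (ax, ay), d in zip(anchors, ranges):
            dx, dy = x - ax, y - ay
            dist = math.hypot(dx, dy)
            if dist < 1e-9:
                continue  # Jacobian is undefined exactly at an anchor
            ux, uy = dx / dist, dy / dist  # unit vector from anchor to estimate
            r = dist - d                   # range residual
            a11 += ux * ux
            a12 += ux * uy
            a22 += uy * uy
            b1 -= ux * r
            b2 -= uy * r
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:
            # All anchor directions are parallel: the ranges cannot fix the position.
            raise ValueError("degenerate anchor geometry: normal matrix is singular")
        # Solve the 2x2 system in closed form and apply the step.
        x += (a22 * b1 - a12 * b2) / det
        y += (a11 * b2 - a12 * b1) / det
    return x, y
```

With four anchors at the corners of a 10 m square and noise-free ranges, the iteration started from the center recovers the true position. With three anchors on one line and an initial guess on that same line, the normal matrix is singular and the function raises instead of returning a meaningless estimate.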

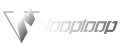

- First, a UWB-assisted 7-degree-of-freedom (scale factor, 3D position, and 3D orientation) global alignment module initializes the visual odometry (VO) system in the world frame defined by the UWB anchors. This module loosely fuses up-to-scale VO and ranging data using either quadratically constrained quadratic programming (QCQP) or nonlinear least squares (NLS), depending on whether a good initial guess is available.

- Second, an accompanying theoretical analysis is provided, including the derivation and interpretation of the Fisher Information Matrix (FIM) and its determinant.
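The 7-DoF global alignment in the first item can be sketched as a residual function that an NLS solver would minimize. This is an illustrative assumption about the cost, not the paper's exact formulation: it ignores measurement noise and any offset between the camera and the UWB antenna, and the names `to_world` and `alignment_residuals` as well as the parameter layout (scale, rotation vector, translation) are hypothetical.

```python
import math

def rotation_from_rotvec(rv):
    """Rodrigues' formula: rotation vector (axis * angle) -> 3x3 matrix."""
    theta = math.sqrt(sum(c * c for c in rv))
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (c / theta for c in rv)
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def distance(a, b):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def to_world(params, p_vo):
    """Map an up-to-scale VO position into the anchor-defined world frame.

    params = [scale, rx, ry, rz, tx, ty, tz] (7 degrees of freedom).
    """
    scale, rotvec, trans = params[0], params[1:4], params[4:7]
    R = rotation_from_rotvec(rotvec)
    return [
        scale * sum(R[i][j] * p_vo[j] for j in range(3)) + trans[i]
        for i in range(3)
    ]

def alignment_residuals(params, vo_positions, anchor_ids, ranges, anchors):
    """One residual per range: predicted anchor distance minus measured distance."""
    residuals = []
    for p_vo, k, d in zip(vo_positions, anchor_ids, ranges):
        residuals.append(distance(to_world(params, p_vo), anchors[k]) - d)
    return residuals
```

Passing `alignment_residuals` to a generic least-squares routine with a reasonable initial guess corresponds to the NLS branch. When no such guess exists, the source describes a QCQP formulation instead, which this sketch does not cover.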

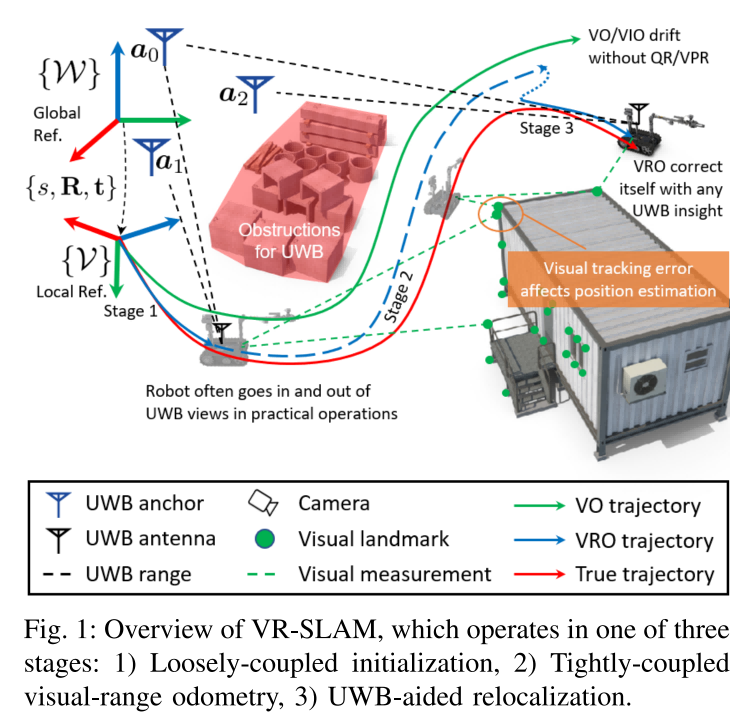

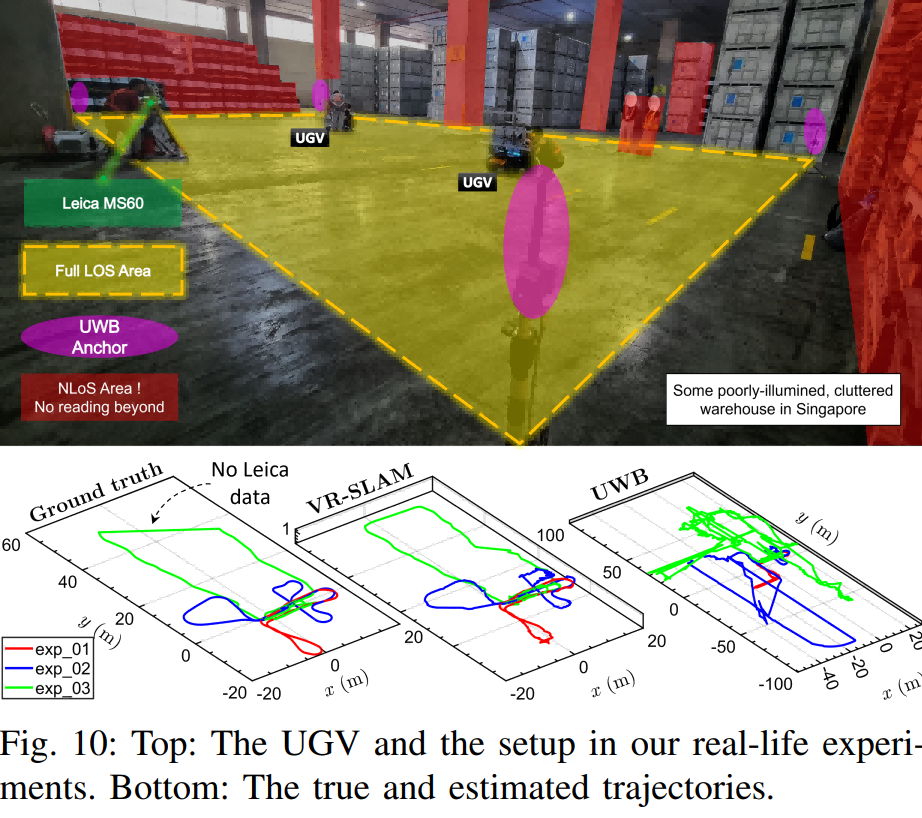
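The role of the FIM determinant can be illustrated on a much simpler problem than the one analyzed in the paper: estimating a 2D position from ranges with independent Gaussian noise. The sketch below is an assumption made for these notes and does not reproduce the paper's derivation; `range_fim_2d` and the noise level `sigma` are hypothetical names.

```python
import math

def range_fim_2d(anchors, position, sigma=0.1):
    """FIM of a 2D position given ranges with i.i.d. Gaussian noise (std sigma).

    For range measurements the FIM is (1 / sigma^2) * sum(u_i u_i^T), where
    u_i is the unit vector from anchor i to the position.
    """
    f11 = f12 = f22 = 0.0
    for ax, ay in anchors:
        dx, dy = position[0] - ax, position[1] - ay
        dist = math.hypot(dx, dy)
        ux, uy = dx / dist, dy / dist
        f11 += ux * ux
        f12 += ux * uy
        f22 += uy * uy
    w = 1.0 / (sigma * sigma)
    return [[w * f11, w * f12], [w * f12, w * f22]]

def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]
```

A larger determinant means the ranges constrain the position more tightly in every direction. A determinant of zero means at least one direction is unobservable, which happens in this toy model when the position and all anchors lie on one line.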

- Finally, UWB-assisted bundle adjustment (UBA) and UWB-assisted pose graph optimization (UPGO) modules are proposed to improve short-term odometry accuracy, reduce long-term drift, and correct any alignment and scale errors. Extensive simulations and experiments show that the proposed solution outperforms UWB-only and camera-only approaches as well as previous methods, recovers quickly from tracking failures without relying on visual relocalization, and obtains a global map easily, even without loop closure.
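Why range factors reduce drift without loop closure can be seen in a toy cost function that combines relative odometry constraints with anchor ranges. This is a position-only 2D sketch written for these notes, not the UPGO formulation from the paper (which also handles orientation and scale); `range_augmented_cost` and its weights are hypothetical.

```python
import math

def range_augmented_cost(positions, odom_edges, range_obs, anchors,
                         w_odom=1.0, w_range=1.0):
    """Sum of squared odometry and range residuals for a 2D trajectory.

    odom_edges: (i, j, (dx, dy)) relative displacement from node i to node j.
    range_obs:  (i, k, d) measured distance d from node i to anchor k.
    """
    cost = 0.0
    for i, j, (dx, dy) in odom_edges:
        ex = positions[j][0] - positions[i][0] - dx
        ey = positions[j][1] - positions[i][1] - dy
        cost += w_odom * (ex * ex + ey * ey)
    for i, k, d in range_obs:
        predicted = math.hypot(positions[i][0] - anchors[k][0],
                               positions[i][1] - anchors[k][1])
        cost += w_range * (predicted - d) ** 2
    return cost
```

A trajectory that is rigidly shifted from the truth still satisfies every odometry edge, so the odometry terms alone cannot detect the offset. The range terms are tied to fixed anchors and therefore penalize it, which is the intuition behind obtaining a globally consistent map from ranging alone.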

VIRAL-Fusion: A Visual-Inertial-Ranging-Lidar Sensor Fusion Approach

Resources

- Material provided by Dr. Yuansheng Hai

- https://arxiv.org/pdf/2303.10903.pdf